Nested monadic loops may cause space leaks

Published on

Consider the following trivial Haskell program:

main :: IO ()

main = worker

{-# NOINLINE worker #-}

worker :: (Monad m) => m ()

worker =

let loop = poll >> loop

in loop

poll :: (Monad m) => m a

poll = return () >> pollIt doesn’t do much — except, as it turns out, eat a lot of memory!

% ./test +RTS -s & sleep 1s && kill -SIGINT %1

751,551,192 bytes allocated in the heap

1,359,059,768 bytes copied during GC

450,901,152 bytes maximum residency (11 sample(s))

7,166,816 bytes maximum slop

888 MB total memory in use (0 MB lost due to fragmentation)

Tot time (elapsed) Avg pause Max pause

Gen 0 1429 colls, 0 par 0.265s 0.265s 0.0002s 0.0005s

Gen 1 11 colls, 0 par 0.701s 0.703s 0.0639s 0.3266s

INIT time 0.000s ( 0.000s elapsed)

MUT time 0.218s ( 0.218s elapsed)

GC time 0.966s ( 0.968s elapsed)

EXIT time 0.036s ( 0.036s elapsed)

Total time 1.223s ( 1.222s elapsed)

%GC time 79.0% (79.2% elapsed)

Alloc rate 3,450,267,071 bytes per MUT second

Productivity 21.0% of total user, 21.0% of total elapsedThese nested loops happen often in server-side programming. About a year ago, when I worked for Signal Vine, this happened to my code: the inner loop was a big streaming computation; the outer loop was something that would restart the inner loop should it fail.

Later that year, Edsko de Vries blogged about a very similar issue.

Recently, Sean Clark Hess observed something similar. In his case, the inner loop waits for a particular AMQP message, and the outer loop calls the inner loop repeatedly to extract all such messages.

So why would such an innocent-looking piece of code consume unbounded amounts of memory? To find out, let’s trace the program execution on the STG level.

Background: STG and IO

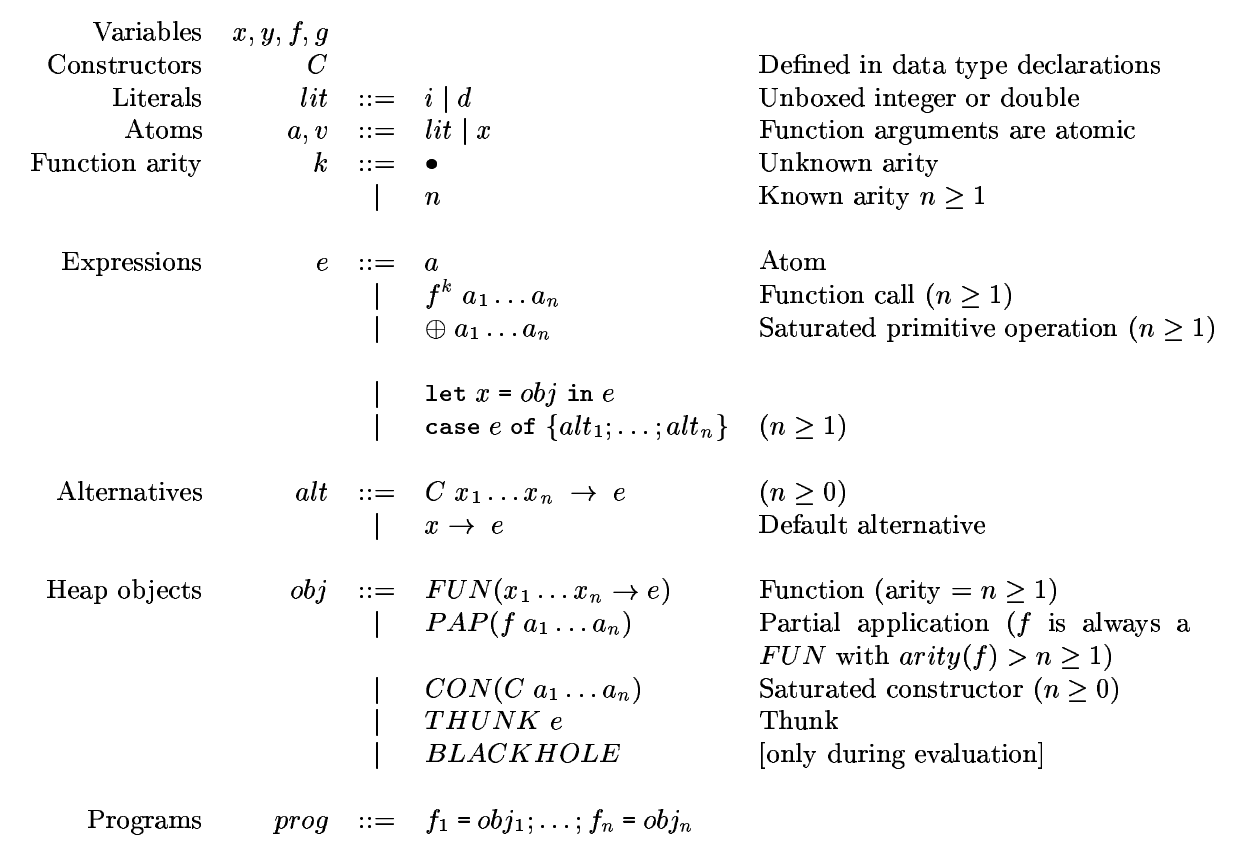

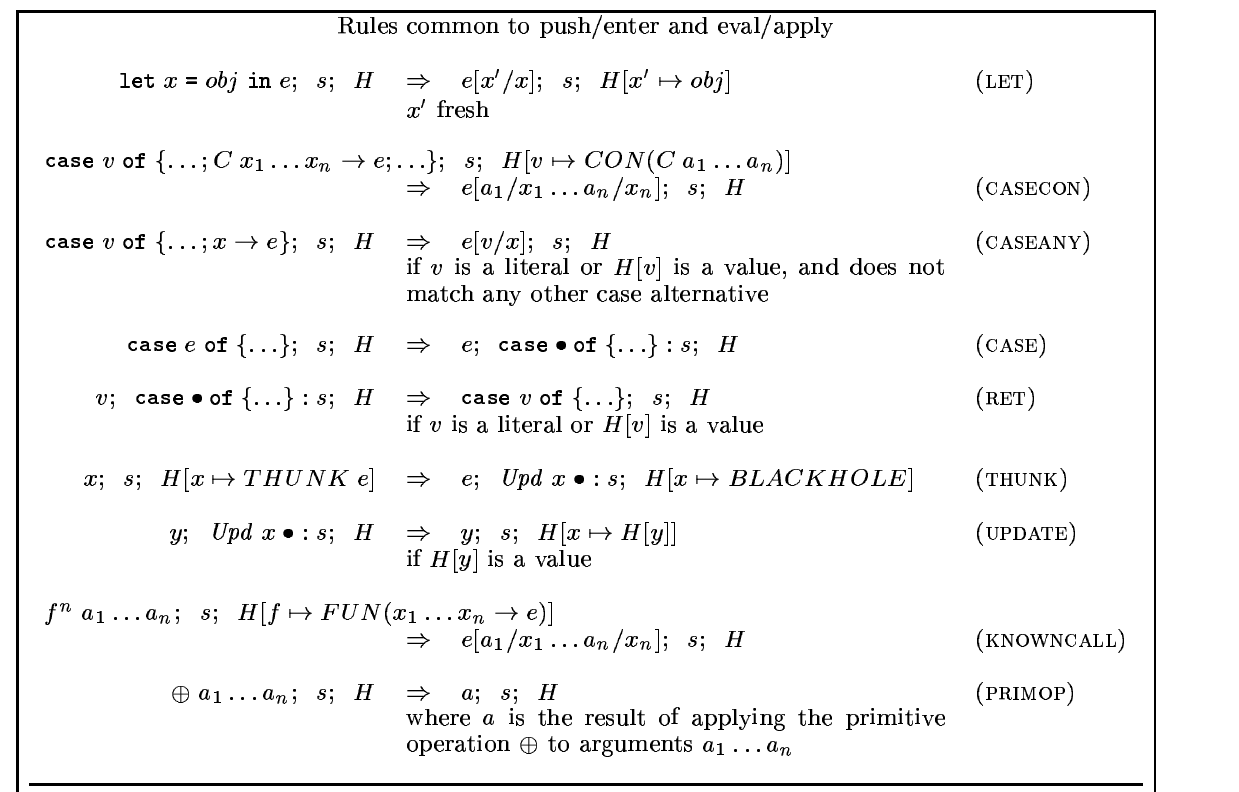

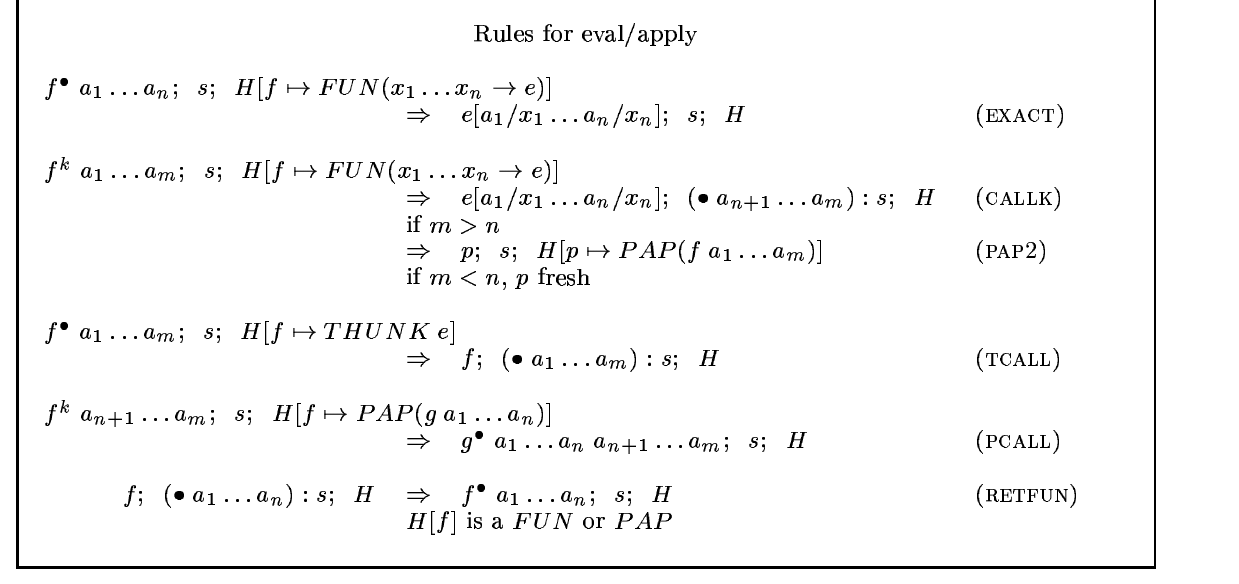

The runtime model of ghc-compiled programs is described in the paper Making a Fast Curry: Push/Enter vs. Eval/Apply for Higher-order Languages. Here is the grammar and the reduction rules for the quick reference.

It is going to be important that the IO type in GHC is a

function type:

newtype IO a = IO (State# RealWorld -> (# State# RealWorld, a #))Here are a few good introductions to the internals of

IO: from Edsko

de Vries, Edward

Z. Yang, and Michael

Snoyman.

Our program in STG

Let’s see now how our program translates to STG. This is a

translation done by ghc 8.0.1 with

-O -ddump-stg -dsuppress-all:

poll_rnN =

sat-only \r srt:SRT:[] [$dMonad_s312]

let { sat_s314 = \u srt:SRT:[] [] poll_rnN $dMonad_s312; } in

let { sat_s313 = \u srt:SRT:[] [] return $dMonad_s312 ();

} in >> $dMonad_s312 sat_s313 sat_s314;

worker =

\r srt:SRT:[] [$dMonad_s315]

let {

loop_s316 =

\u srt:SRT:[] []

let { sat_s317 = \u srt:SRT:[] [] poll_rnN $dMonad_s315;

} in >> $dMonad_s315 sat_s317 loop_s316;

} in loop_s316;

main = \u srt:SRT:[r2 :-> $fMonadIO] [] worker $fMonadIO;This is the STG as understood by ghc itself. In the notation of the fast curry paper introduced above, this (roughly) translates to:

main = THUNK(worker monadIO realWorld);

worker = FUN(monad ->

let {

loop = THUNK(let {worker_poll_thunk = THUNK(poll monad);}

in then monad worker_poll_thunk loop);

} in loop

);

poll = FUN(monad ->

let {

ret_thunk = THUNK(return monad unit);

poll_poll_thunk = THUNK(poll monad);

}

in then monad ret_thunk poll_poll_thunk

);monadIO is the record (“dictionary”) that contains the

Monad methods >>=, >>, and

return for the IO type. We will need return

and >> (called then here) in particular;

here is how they are defined:

returnIO = FUN(x s -> (# s, x #));

thenIO = FUN(m k s ->

case m s of {

(# new_s, result #) -> k new_s

}

);

monadIO = CON(Monad returnIO thenIO);

return = FUN(monad ->

case monad of {

Monad return then -> return

}

);

then = FUN(monad ->

case monad of {

Monad return then -> then

}

);STG interpreters

We could run our STG program by hand following the reduction rules listed above. If you have never done it, I highly recommend performing several reductions by hand as an exercise. But it is a bit tedious and error-prone. That’s why we will use Bernie Pope’s Ministg interpreter. My fork of Ministg adds support for unboxed tuples and recursive let bindings necessary to run our program.

There is another STG interpreter, stgi, by David Luposchainsky. It is more recent and looks nicer, but it doesn’t support the eval/apply execution model used by ghc, which is a deal breaker for our purposes.

We run Ministg like this:

ministg --noprelude --trace --maxsteps=100 --style=EA --tracedir leak.trace leak.stgMinistg will print an error message saying that the program hasn’t

finished running in 100 steps — as we would expect, — and it will also

generate a directory leak.trace containing html files. Each

html file shows the state of the STG machine after a single evaluation

step. You can browse these files here.

Tracing the program

Steps 0

through 16

take us from main to poll monadIO, which is

where things get interesting, because from this point on, only code

inside poll will be executing. Remember, poll

is an infinite loop, so it won’t give a chance for worker

to run ever again.

Each iteration of the poll loop consists of two phases.

During the first phase, poll monadIO is evaluated. This is

the “pure” part. No IO gets done during this part; we are just figuring

out what is going to be executed. The first phase runs up until

step

24.

On step

25, we grab the RealWorld token from the stack, and the second phase

— the IO phase — begins. It ends on step

42, when the next iteration of the loop begins with

poll monadIO.

Let’s look at the first phase in more detail. In steps 18 and 19, the let-expression

let {

ret_thunk = THUNK(return monad unit);

poll_poll_thunk = THUNK(poll monad);

}

in then monad ret_thunk poll_poll_thunkis evaluated. The thunks ret_thunk and

poll_poll_thunk are allocated on the heap at addresses

$3 and $4, respectively.

Later these thunks will be evaluated/updated to partial applications:

$3=PAP(returnIO unit) on step

35 and $4=PAP(thenIO $7 $8) on step

50.

We would hope that these partial applications will eventually be

garbage-collected. Unfortunately, not. The partial application

$1=PAP(thenIO $3 $4) is defined in terms of $3

and $4. $1 is the

worker_poll_thunk, the “next” instance of the

poll loop invoked by worker.

This is why the leak doesn’t occur if there’s no outer loop. Nothing

would reference $3 and $4, and they would be

executed and gc’d.

IO that doesn’t leak

The memory leak is a combination of two reasons. As we discussed above, the first reason is the outer loop that holds on to the reference to the inner loop.

The second reason is that IO happens here in two phases: the pure phase, during which we “compute” the IO action, and the second phase, during which we run the computed action. If there was no first phase, there would be nothing to remember.

Consider this version of the nested loop. Here, I moved NOINLINE to poll. (NOINLINE is needed because otherwise ghc would realize that our program doesn’t do anything and would simplify it down to a single infinite loop.)

main :: IO ()

main = worker

worker :: (Monad m) => m ()

worker =

let loop = poll >> loop

in loop

{-# NOINLINE poll #-}

poll :: (Monad m) => m a

poll = return () >> pollIn this version, ghc would inline worker into main and

specialize it to IO. Here is the ghc’s STG code:

poll_rqk =

sat-only \r srt:SRT:[] [$dMonad_s322]

let { sat_s324 = \u srt:SRT:[] [] poll_rqk $dMonad_s322; } in

let { sat_s323 = \u srt:SRT:[] [] return $dMonad_s322 ();

} in >> $dMonad_s322 sat_s323 sat_s324;

main1 =

\r srt:SRT:[r3 :-> main1, r54 :-> $fMonadIO] [s_s325]

case poll_rqk $fMonadIO s_s325 of _ {

(#,#) ipv_s327 _ -> main1 ipv_s327;

};Here, poll still runs in two phases, but

main1 (the outer loop) doesn’t. This program still

allocates memory and runs not as efficient as it could, but at least it

runs in constant memory. This is because the compiler realizes that

poll_rqk $fMonadIO is not computing anything useful and

there’s no point in caching that value. (I am actually curious what

exactly ghc’s logic is here.)

What if we push NOINLINE even further down?

main :: IO ()

main = worker

worker :: (Monad m) => m ()

worker =

let loop = poll >> loop

in loop

poll :: (Monad m) => m a

poll = do_stuff >> poll

{-# NOINLINE do_stuff #-}

do_stuff :: Monad m => m ()

do_stuff = return ()STG:

do_stuff_rql =

sat-only \r srt:SRT:[] [$dMonad_s32i] return $dMonad_s32i ();

$spoll_r2SR =

sat-only \r srt:SRT:[r54 :-> $fMonadIO,

r2SR :-> $spoll_r2SR] [s_s32j]

case do_stuff_rql $fMonadIO s_s32j of _ {

(#,#) ipv_s32l _ -> $spoll_r2SR ipv_s32l;

};

main1 =

\r srt:SRT:[r3 :-> main1, r2SR :-> $spoll_r2SR] [s_s32n]

case $spoll_r2SR s_s32n of _ {

(#,#) ipv_s32p _ -> main1 ipv_s32p;

};This code runs very efficiently, in a single phase, and doesn’t allocate at all.

Of course, in practice we wouldn’t deliberately put these NOINLINEs in our code just to make it inefficient. Instead, the inlining or specialization will fail to happen because the function is too big and/or resides in a different module, or for some other reason.

Arities

Arities provide an important perspective on the two-phase computation

issue. The arity of then is 1: it is just a record

selector. The arity of thenIO is 3: it takes the two

monadic values and the RealWorld state token.

Arities influence what happens at runtime, as can be seen from the

STG reduction rules. Because thenIO has arity 3, a partial

application is created for

thenIO ret_thunk poll_poll_thunk. Let’s change the arity of

thenIO to 2, so that no PAPs get created:

thenIO = FUN(m k ->

case m realWorld of {

(# new_s, result #) -> k

}

);(this is similar to how unsafePerformIO works). Now we

no longer have PAPs, but our heap is filled with the same exact number

of BLACKHOLEs.

More importantly, arities also influence what happens during compile

time: what shape the generated STG code has. Because then

has arity 1, ghc decides to create a chain of thens before

passing the RealWorld token. Let’s change (“eta-expand”) the

poll code as if then had arity 4,

without actually changing then or thenIO or

their runtime arities:

# added a dummy argument s

poll = FUN(monad s ->

let {

ret_thunk = THUNK(return monad unit);

poll_poll_thunk = THUNK(poll monad);

}

in then monad ret_thunk poll_poll_thunk s

);

# no change in then or thenIO

then = FUN(monad ->

case monad of {

Monad return then -> then

}

);

thenIO = FUN(m k s ->

case m s of {

(# new_s, result #) -> k new_s

}

);This code now runs in constant memory!

Therefore, what inlining/specialization does is that it lets the

compiler to see the true arity of a function such as

then. (Of course, it would also allow the compiler to

replace then with thenIO.)

Conclusions

Let me tell you how you can avoid any such space leaks in your code by following a simple rule:

I don’t know.

In some cases, -fno-full-laziness

or -fno-state-hack help. In this case, they don’t.

In 2012, I wrote why reasoning about space usage in Haskell is hard. I don’t think anything has changed since then. It is a hard problem to solve. I filed a ghc bug #13080 just in case the ghc developers might figure out a way how to address this particular issue.

Most of the time everything works great, but once in a while you stumble upon something like this. Such is life.

Thanks to Reid Barton for pointing out that my original theory regarding this leak was incomplete at best.